Security professionals develop and deploy signatures to detect and prevent malicious, suspicious, and anomalous patterns. These signatures, typically obtained from a vendor or created internally, are digital fingerprints that help identify and alert on specific activity. They are then deployed to a security incident management platform, which watches relevant system logs and flags any pattern match.

While the lifecycle of a detection signature depends on the organization’s prioritization and resources, just as critical as the signature itself is assurance that the signatures (1) function properly on deployment and (2) continue to function properly throughout their deployment. This post describes an approach that uses synthetic events to validate signature integrity and functionality, with the goal of achieving continuous assurance of a system’s detection signatures.

Assumptions in Detection

Detection signatures are used in detection platforms (such as Security Information and Event Management [SIEM] platforms) to identify patterns in system, application, and user logs. An analyst or researcher identifies a potential vulnerability or detection coverage gap, reviews how that gap can be identified with their tooling, drafts detection signatures, and deploys those signatures to that detection tooling. As such, the process of developing and deploying detection signatures at most organizations is (typically) as follows:

Figure 1: Typical Detection Signature Development Process

Although intelligence is reviewed and detection is ultimately deployed under this process, it rests on a few assumptions that may limit visibility into how the signature is performing in the tooling:

1. The signature logic is valid:

Does the deployed signature pattern match the reported intelligence?

If using a pattern-matching syntax (e.g., Regular Expressions), does the system fully support your pattern?

If you’re dealing with logical inclusionary/exclusionary statements, does order matter in the tool?

2. The detection and alerting pipeline works:

Is the syntax overly complex? If so, alerting or time to alert may be affected.

How many system components exist between log generation and the eventual response? Do alerts go to a case management or awareness platform? Will that always work the same way?

3. The availability and integrity of logs is constant:

If your signature is looking for “Field X” on the day of signature deployment, but the log later changes so that the field is named “Field Y,” will that be accounted for?

Are all logs being properly scanned by the detection tooling?
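The question of clause ordering under the first assumption can be illustrated with a small sketch. The two evaluation strategies, the clause format, and the sample command line below are all hypothetical and do not describe any particular product; the point is only that the same inclusion and exclusion clauses can produce different outcomes depending on how a tool evaluates them.

```python
def first_match_wins(clauses, value):
    """Evaluate clauses top to bottom; the first clause that matches decides."""
    for action, needle in clauses:
        if needle in value:
            return action == "include"
    return False


def exclusions_take_priority(clauses, value):
    """Any matching exclusion suppresses the alert, regardless of position."""
    if any(action == "exclude" and needle in value for action, needle in clauses):
        return False
    return any(action == "include" and needle in value for action, needle in clauses)


# Hypothetical signature: alert on PowerShell, except for a known admin script.
clauses = [("include", "powershell"), ("exclude", "signed-admin-script")]
command = "powershell -File signed-admin-script.ps1"

print(first_match_wins(clauses, command))          # True: the include clause is hit first
print(exclusions_take_priority(clauses, command))  # False: the exclusion suppresses the alert
```

An author who assumes one strategy while the tool applies the other would deploy a signature that either alerts on excluded activity or never alerts at all.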

There are additional assumptions that may be specific to an organization’s tools, logs, resources, and users, so it is important to review potential assumptions that may be providing a false sense of security. But what can be done about these assumptions? How can an organization increase confidence that the detection they deploy is functional not just today, but also tomorrow?

Developing Confidence

The detection process above (Fig. 1) should exist more as a cycle, rather than a linear process of development and deployment. By continuously revisiting detection, content authors will gain detection confidence, which can be defined as ensuring that the detection content: (1) matches the requirements of the tool (e.g., syntax, qualifiers), and (2) accurately performs developed actions upon a match (e.g., alerting).

To properly validate these requirements, one can develop a system for deploying synthetic events at a set frequency. Synthetic events are logs that are developed to match the logic for a related rule. The purpose of these events is to mimic an actual event that would match a signature’s detection logic, without requiring actual (potentially malicious) activity to occur on a system. For example, if a detection signature was created to detect access to a specific URL, the system can submit a synthetic event to spoof the alerting system into thinking the URL was “accessed.” The same data would be passed through the system; however, no actual physical or virtual access occurred between the client and the URL.
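As a minimal sketch of the URL example, the snippet below builds a log record that looks like a web request to the watched URL without any request being made. The field names, the `build_synthetic_event` and `signature_matches` helpers, and the example URL and IP address are assumptions made for illustration; a real implementation would mirror the exact log schema the detection tooling ingests.

```python
import json
import uuid
from datetime import datetime, timezone

# Hypothetical URL that the signature under test is watching for.
WATCHED_URL = "http://malicious.example/login"


def build_synthetic_event(url, client_ip="192.0.2.10"):
    """Build a log record shaped like a real web request; no request is sent."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": "web_request",
        "client_ip": client_ip,  # documentation-range address, not a real client
        "url": url,
        "event_id": str(uuid.uuid4()),
    }


def signature_matches(event, watched_url):
    """Stand-in for the signature: a web request whose URL equals the watched one."""
    return event.get("event_type") == "web_request" and event.get("url") == watched_url


event = build_synthetic_event(WATCHED_URL)
print(json.dumps(event, indent=2))
print(signature_matches(event, WATCHED_URL))
```

In practice the record would be written to the same log source or ingestion endpoint that real events use, so that it travels the same path through the detection tooling.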

Figure 2: Synthetic Event Definition

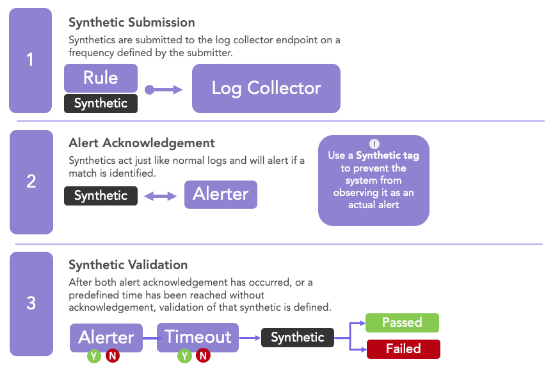

As these events are generated to test detection signatures, assuming the event matches the logic of the signature, the alerting engine should produce an alert. However, if one does not want to alert on these logs, each event can be provided a tag that can be ignored by the alerting engine. If the alerting engine comes across a synthetic event with this tag, the alerting engine can ignore the alert, or send it through a different pipeline (e.g., a silent pipeline that can provide feedback about synthetic event alerting) to test one or more components of the alerting pipeline (e.g., alerts go to a case management tool). Figure 3 provides a process flow for a potential synthetic event process:

Figure 3: Conceptual Synthetic Event Process
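The tagging step described above might look like the following sketch. The tag value, the pipeline names, and the `route_alert` function are hypothetical; they only illustrate how an alerting engine could divert alerts raised by synthetic events into a silent pipeline while leaving real alerts untouched.

```python
# Hypothetical tag that marks an event as synthetic.
SYNTHETIC_TAG = "synthetic_test"


def route_alert(rule_name, event):
    """Choose a destination pipeline for an alert raised by rule_name on event."""
    alert = {"rule": rule_name, "event": event}
    if SYNTHETIC_TAG in event.get("tags", []):
        # Synthetic alerts go to a silent pipeline that only records feedback.
        return "silent", alert
    # Everything else follows the normal path, e.g. a case management tool.
    return "case_management", alert


real_event = {"url": "http://malicious.example/login"}
synthetic_event = {"url": "http://malicious.example/login", "tags": [SYNTHETIC_TAG]}

print(route_alert("watched_url", real_event)[0])       # case_management
print(route_alert("watched_url", synthetic_event)[0])  # silent
```

Routing after the rule has matched, rather than filtering tagged events before evaluation, keeps the signature logic itself under test.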

Lifecycle Assurance

As noted in the above section, the implementation of synthetic events can increase the detection confidence of signature developers and systems engineers. Depending on the implementation, the following benefits can be observed with synthetic events:

- Increased confidence in deployed rule logic

- Increased confidence in alerting pipeline

- Continuous assurance in rule logic

- Awareness of potential rule issues

- Awareness of potential log modifications

- Awareness of potential alerting or pipeline issues

- Identification of potential bugs in alerting system

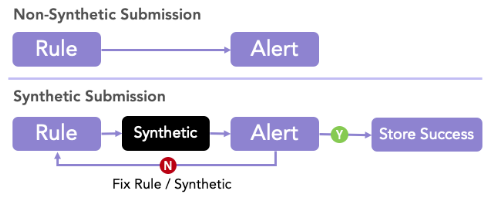

Figure 4 illustrates the benefit of adding synthetic events to the rule lifecycle. If no synthetic events are implemented, we assume that the rule will alert and that, when it does, it will alert on what it was designed to detect. Synthetic events allow detection authors and engineers to actively tweak and observe the cycle, rather than passively set and forget.

Figure 4: Basic Synthetic Cycle Vs. Normal Alerting Flow
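One pass of such a cycle could be sketched as below, assuming each rule has a paired synthetic event that reflects the current log format. The rule definitions, event shapes, and the `find_silent_rules` helper are hypothetical. Here the login log is imagined to have renamed its `user` field to `username`, so the second rule has quietly stopped matching.

```python
import re

# Hypothetical rules: each names the log field it inspects and a regex for it.
RULES = {
    "bad_url": {"field": "url", "pattern": r"^https?://bad\.example/"},
    "admin_login": {"field": "user", "pattern": r"^admin$"},
}

# Hypothetical synthetic events, shaped like the logs as they look today.
# The login log now uses "username", so the "admin_login" rule is stale.
SYNTHETIC_EVENTS = {
    "bad_url": {"url": "http://bad.example/payload"},
    "admin_login": {"username": "admin"},
}


def rule_fires(rule, event):
    """Return True when the event has the rule's field and the value matches."""
    value = event.get(rule["field"])
    return isinstance(value, str) and re.search(rule["pattern"], value) is not None


def find_silent_rules(rules, synthetic_events):
    """Return the names of rules that did not fire on their synthetic event."""
    silent = []
    for name, rule in rules.items():
        event = synthetic_events.get(name)
        if event is None or not rule_fires(rule, event):
            silent.append(name)
    return silent


print(find_silent_rules(RULES, SYNTHETIC_EVENTS))  # ['admin_login']
```

Run on a schedule, a check like this turns a silent failure into a visible one: the rule that no longer matches is reported instead of being assumed healthy.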

Conclusion

Testing has always been a keystone of software engineering, but continuous testing of content such as detection signatures is not commonplace in detection engineering. One may assume that because they observed success in their system (e.g., a rule properly alerts), the system is functioning properly. However, the system may be nonfunctional in specific areas, for specific signatures, or at specific times. By implementing a system of synthetic events, users will be able to increase confidence in not just the signatures they deploy, but also the systems they deploy on.